Wearable device could help people regain their voice by translating throat muscle movements into speech with nearly 95 percent accuracy

A team of engineers at the University of California, Los Angeles (UCLA) has invented a small, thin, flexible assistive device that adheres to the neck and translates the muscle movements of the larynx into audible speech.

The wearable technology is designed to help people with vocal cord impairments regain their voice function. These include people with pathological vocal cord conditions and individuals recovering from laryngeal cancer surgery.

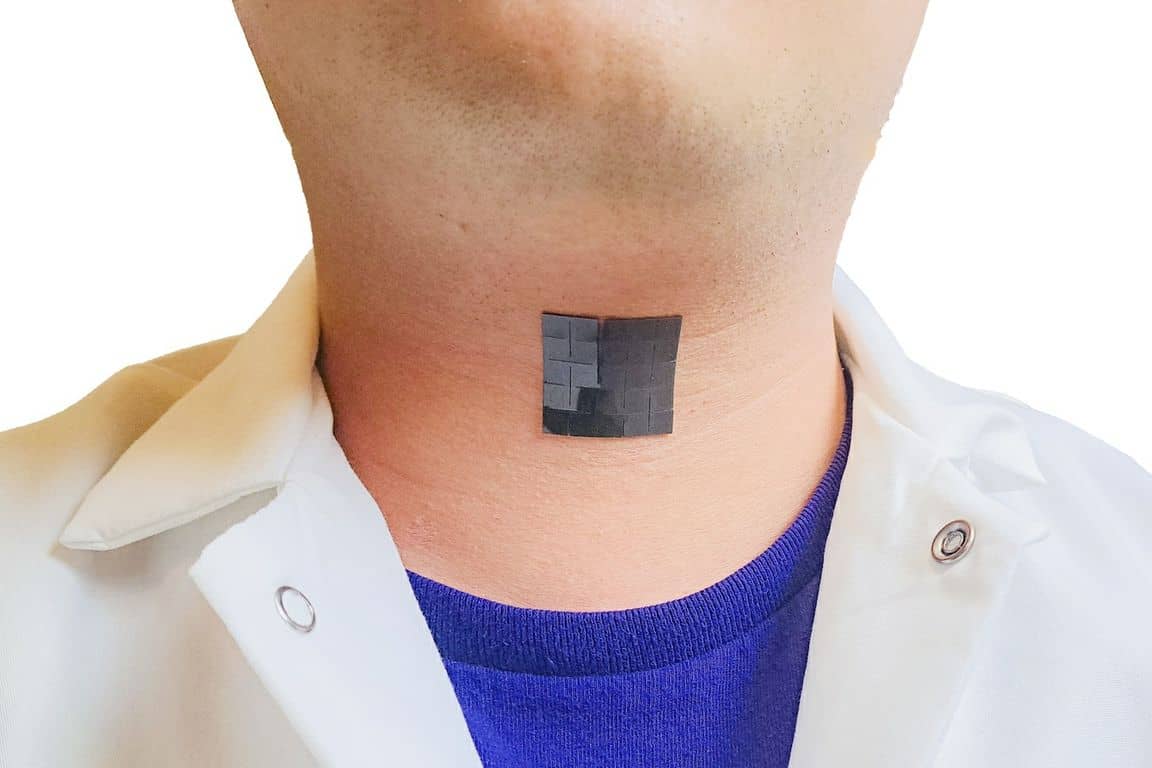

Measuring just over one square inch, the soft and stretchy device is attached to the skin outside the throat. It is designed to be flexible enough to move with and capture the activity of laryngeal muscles beneath the skin.

The device is lightweight, weighing around seven grams, and is 0.06 inches thick.

The technology was developed by Jun Chen, an assistant professor of bioengineering at the UCLA Samueli School of Engineering, and his colleagues. It detects movement in a person’s laryngeal muscles and, with the help of machine learning, translates those signals into audible speech with nearly 95 percent accuracy.

“Existing solutions such as handheld electro-larynx devices and tracheoesophageal-puncture procedures can be inconvenient, invasive or uncomfortable,” Chen said. “This new device presents a wearable, non-invasive option capable of assisting patients in communicating during the period before treatment and during the post-treatment recovery period for voice disorders.”

The device is built from five layers that form two components: one that senses muscle movement and one that produces sound. The sensing component turns muscle movement into electrical signals, which machine learning then converts into speech signals that the actuation component renders as audible vocal expression.

In their experiments, the researchers tested the wearable technology on eight healthy adults. They collected data on laryngeal muscle movement and used a machine-learning algorithm to correlate the resulting signals with specific words. The corresponding output voice signal was then selected and produced through the device’s actuation component.

The research team demonstrated the system’s accuracy by having the participants pronounce five sentences — both aloud and voicelessly — including “Hi, Rachel, how are you doing today?” and “I love you!”

The model’s overall prediction accuracy was 94.68 percent. With the participants’ voice signals amplified by the actuation component, the results demonstrated that the sensing mechanism recognised their laryngeal movement signals and matched them to the sentences the participants wished to say.

Going forward, the research team plans to continue enlarging the vocabulary of the device through machine learning and to test it in people with speech disorders.

The research is published in the journal Nature Communications.

At Microsoft’s recent Ability Summit, the firm previewed some of its ongoing work to use Custom Neural Voice to empower people with ALS and other speech disabilities to have their own voice. This voice banking technology is designed to capture the intonations, tone, and emotion of human voices to make computerised voices sound more personalised and help individuals express their personality and identity.